ROI Case File No. 076: Lost Conversations in the Lines

📅 2025-07-12

🕒 Reading time: 6 min

🏷️ Design DX 🏷️ AI Image Analysis 🏷️ Automotive Components 🏷️ Drawing Recognition 🏷️ 3C Analysis 🏷️ Communication Revival

- ROI Case File No. 076 | Lost Conversations in the Lines

- Chapter One: The Maze of Lines and Hidden Loneliness

- Chapter Two: When Silence Becomes the Enemy of Learning

- Chapter Three: The Invisible Excellence Trap

- Chapter Four: AI as the Common Language

- Chapter Five: The Courage to Question Returns

- Chapter Six: Beyond Technical Solutions

- Chapter Seven: The 3C Analysis Framework

- Chapter Eight: The Detective's Reflection

- Chapter Nine: The Birth of New Design Room Culture

- Chapter Ten: The Lesson for Knowledge Work

- Epilogue: The True Language of Innovation

ROI Case File No. 076 | Lost Conversations in the Lines

When drawings speak louder than words, someone must teach us how to listen

Chapter One: The Maze of Lines and Hidden Loneliness

"In this diagram, which parts are 'meaningful' and which are just 'lines'?" When our AI analyst ChatGPT posed this question, the design supervisor from Motrix Components Ltd. let out a deep sigh.

"Honestly... we can't tell anymore."

The automotive electrical component manufacturer shared large PDF drawings across teams, but interpreting them depended almost entirely on "human eyes." Despite the team's technical expertise, something fundamental was breaking down.

"We used to have vibrant discussions—'What does this line mean?' 'Is this connection correct?' But lately, everyone just works silently with their drawings. They're afraid that asking questions makes them look incompetent."

This wasn't just about technical drawing recognition—it was about the breakdown of collaborative learning in knowledge work.

Chapter Two: When Silence Becomes the Enemy of Learning

Our investigation revealed a troubling cultural shift. The design team possessed impressive technical skills, but had developed a fear of appearing ignorant that was stifling both individual growth and team innovation.

"When I say 'I don't understand this part,' it sounds like I'm admitting professional failure," confessed a mid-level designer.

The workspace had transformed from a collaborative environment into a collection of isolated problem-solvers, each struggling alone with complex technical documents.

"An environment where people can't ask questions," observed ChatGPT, "becomes an environment where no one can grow."

Chapter Three: The Invisible Excellence Trap

As we analyzed their workflow, a shocking truth emerged: the team was actually performing at a remarkably high level. Complex wire harness diagrams, automotive electrical specifications, connection protocols—they handled it all with expertise.

But they couldn't see their own competence.

"Looking at these drawings, we can identify component symbols, trace connections, and interpret annotations—but we can't extract that knowledge systematically," explained the design supervisor.

The challenge wasn't lack of ability—it was lack of confidence in their ability.

"Excellence that can't be articulated becomes excellence that can't be trusted," noted Claude with characteristic insight.

Chapter Four: AI as the Common Language

Motrix implemented AI image analysis to automatically categorize structural lines, annotations, and noise in technical drawings. The system could distinguish meaningful elements from scan artifacts and generate component lists from wire harness diagrams.
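The classification step can be pictured with a deliberately simple, rule-based sketch. The case does not describe the model Motrix used, so everything here is an assumption: the `Element` fields, the pixel thresholds, and the helper names `classify` and `review_queue` are hypothetical, and a real system would rely on a trained image model rather than fixed rules. What the sketch illustrates is that elements the rules cannot settle are labeled `unclear` instead of being guessed, which yields a list of open questions a team can discuss.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical record for one element detected on a scanned drawing.
@dataclass
class Element:
    kind: str                   # "segment" for line-like marks, "text" for OCR'd text runs
    length_px: float = 0.0      # segment length in pixels (unused for text)
    stroke_px: float = 0.0      # estimated stroke width in pixels
    text: Optional[str] = None  # OCR result for text elements

# Hypothetical thresholds; a real system would learn or tune these per scanner.
MIN_LINE_LENGTH_PX = 20.0
MIN_STROKE_PX = 0.8
MAX_NOISE_LENGTH_PX = 5.0

def classify(el: Element) -> str:
    """Return 'structural_line', 'annotation', 'noise', or 'unclear'."""
    if el.kind == "text":
        # Readable text counts as an annotation; unreadable text goes to a human.
        return "annotation" if el.text and el.text.strip() else "unclear"
    if el.kind == "segment":
        if el.length_px >= MIN_LINE_LENGTH_PX and el.stroke_px >= MIN_STROKE_PX:
            return "structural_line"
        if el.length_px <= MAX_NOISE_LENGTH_PX:
            return "noise"  # tiny specks are typical scan artifacts
    # Anything the rules cannot settle is flagged rather than guessed.
    return "unclear"

def review_queue(elements: list[Element]) -> list[int]:
    """Indices of elements the rules could not settle, i.e. the team's discussion list."""
    return [i for i, el in enumerate(elements) if classify(el) == "unclear"]

elements = [
    Element("segment", length_px=120, stroke_px=1.5),
    Element("text", text="C12 0.5sq"),
    Element("segment", length_px=2, stroke_px=0.3),
    Element("segment", length_px=12, stroke_px=0.5),
]
print([classify(e) for e in elements])
print(review_queue(elements))  # [3]
```

The fourth segment is too short to be a confident structural line but too long to dismiss as a speck, so it lands in the review queue instead of being silently assigned a label.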

But the real innovation was in how they used the technology: AI analysis became a shared starting point for team discussions.

"Does the AI interpretation look correct?" "What's the intent behind this connection?" "Could we represent this more clearly?"

AI provided a neutral foundation for conversations that had become too difficult to start naturally.

"AI doesn't just read drawings," observed ChatGPT. "It creates conversation seeds that help people talk to each other again."

Chapter Five: The Courage to Question Returns

One month after implementation, the atmosphere in the design room had changed dramatically.

"Looking at the AI analysis, I can honestly say 'I don't understand this part' without feeling embarrassed," shared one designer with evident relief.

"I realized that saying 'I don't know' isn't shameful—it's the starting point for improvement," reflected another.

By openly marking the parts it could not interpret, the AI gave people permission to acknowledge their own uncertainties.

More importantly, critical feedback became constructive dialogue.

"I would approach this connection differently" became a common and welcome conversation starter rather than a source of conflict.

Chapter Six: Beyond Technical Solutions

The most beautiful change was unexpected: junior designers began actively seeking mentorship.

"Could you explain the design philosophy behind this diagram? The AI analysis shows the structure, but I'd like to understand the thinking," requested a recent graduate.

The design supervisor's eyes filled with tears. "It's been three years since someone asked me such a specific, thoughtful question."

Technical problem-solving had restored human connection.

"AI didn't just help us read drawings—it removed the invisible barriers that were preventing us from learning from each other."

Chapter Seven: The 3C Analysis Framework

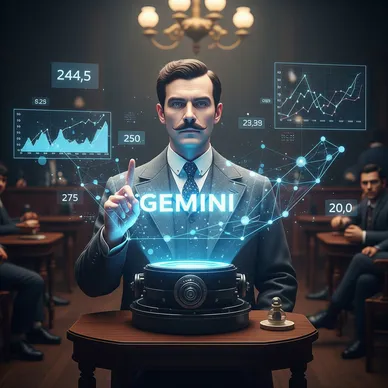

Our analyst Gemini examined the transformation through a strategic 3C analysis:

🧠 3C Analysis (Trust Triangle)

- Customer (Automotive OEMs): Demand drawing accuracy and clear design intent from suppliers

- Company (Design Team): High technical capability but insufficient knowledge sharing and collaborative learning opportunities

- Competitor (Other Component Suppliers): Companies using CAD-based automation are advancing, and collaborative culture alone is hard to turn into a differentiator

🔍 Unique Framework: "5 Steps to Conversation-Generating Structure Extraction"

- AI Drawing Analysis: Adjustment of scan accuracy and classification of structural lines and annotations

- Question Visualization: Text and symbol normalization that makes "unclear parts" explicit

- Collective Intelligence Utilization: A connection graph of the wire harness structure, generated automatically and verified by the entire team

- Shared Understanding: Automatically generated BOM (bill of materials) output, reviewed and discussed by the team

- Knowledge Accumulation: Success patterns and improvement points recorded as organizational knowledge
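Steps 2 to 4 of this framework can be sketched in a few functions, under stated assumptions. The input format (tuples of wire ID, two endpoint labels, and wire gauge), the `CN1-3` style of endpoint label, and the names `normalize_label`, `build_graph`, and `draft_bom` are all hypothetical, and steps 1 and 5 are not shown. Labels are normalized with Unicode NFKC folding (which turns full-width characters from scanned PDFs into ASCII), endpoints that still do not parse are listed as unclear rather than dropped, and the remaining wires produce a connector-level graph and a draft BOM for the team to review.

```python
import re
import unicodedata
from collections import Counter, defaultdict

# Hypothetical endpoint label format: connector name plus pin number, e.g. "CN1-3".
ENDPOINT = re.compile(r"^([A-Z]+\d+)-(\d+)$")

def normalize_label(raw: str) -> str:
    """Step 2: fold full-width characters (NFKC), remove whitespace, uppercase."""
    return re.sub(r"\s+", "", unicodedata.normalize("NFKC", raw)).upper()

def build_graph(wires):
    """Step 3: connector-level connection graph plus wires needing a human look.

    `wires` is an iterable of hypothetical (wire_id, from_label, to_label, gauge) tuples.
    """
    graph = defaultdict(set)
    unclear = []
    for wire_id, a_raw, b_raw, _gauge in wires:
        a_match = ENDPOINT.match(normalize_label(a_raw))
        b_match = ENDPOINT.match(normalize_label(b_raw))
        if not (a_match and b_match):
            unclear.append(wire_id)  # made explicit, not silently guessed
            continue
        a_conn, b_conn = a_match.group(1), b_match.group(1)
        graph[a_conn].add(b_conn)
        graph[b_conn].add(a_conn)
    return dict(graph), unclear

def draft_bom(graph, wires, unclear):
    """Step 4: draft BOM (connectors and wire count per gauge) for team review."""
    skip = set(unclear)
    by_gauge = Counter(g for wire_id, _a, _b, g in wires if wire_id not in skip)
    return {"connectors": sorted(graph), "wires_by_gauge": dict(by_gauge)}

wires = [
    ("W1", "cn1-1", "CN2-1", "0.5sq"),
    ("W2", "\uff23\uff2e1-2", "CN3-1", "0.5sq"),  # full-width "CN" from a scanned PDF
    ("W3", "CN2 -2", "CN3-2", "0.85sq"),
    ("W4", "CN1-3", "??", "0.5sq"),              # unreadable endpoint
]
graph, unclear = build_graph(wires)
print(unclear)  # ['W4']
print(draft_bom(graph, wires, unclear))
```

Keeping the unparseable wire `W4` in its own list, rather than discarding it, mirrors the framework's idea that unclear parts should be made visible so the team can discuss them.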

"Structure is the afterimage of meaning. AI's role is to articulate it, but human dialogue deepens the meaning."

Chapter Eight: The Detective's Reflection

"Lines don't speak," observed Claude. "But when there are readers, they begin to tell stories. And when there are people who discuss those stories together, they become shared wisdom."

I was moved by how AI technology had gone beyond drawing recognition to revive a culture of dialogue within the organization.

"Trust means being able to say 'I don't understand' in a relationship," I reflected.

Holmes nodded. "True expertise begins with the courage to acknowledge ignorance."

Chapter Nine: The Birth of New Design Room Culture

After the project was completed, Motrix's organizational culture underwent a revolution:

- Designer-to-designer questions: Frequency increased from 1 per month to 4 per week

- Design quality improvement: Drawing revision cycles decreased from an average of 3.2 rounds to 1.8

- Junior staff retention: Improved from 68% to 89%

- Organizational satisfaction: "I feel safe asking questions" rose from 45% to 92%
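The scale of these changes is easier to compare with a little arithmetic. This is a reader-side calculation on the figures above, not something reported in the case, and converting "4 per week" to a monthly rate assumes an average of 52/12 (about 4.33) weeks per month.

```python
# Reader-side check of the reported figures (not part of the original case).
WEEKS_PER_MONTH = 52 / 12  # assumption: average weeks per month

def pct_change(before: float, after: float) -> float:
    """Relative change in percent; negative values are decreases."""
    return (after - before) / before * 100

question_ratio = (4 * WEEKS_PER_MONTH) / 1  # 4 per week vs. 1 per month
revision_change = pct_change(3.2, 1.8)      # average revision rounds
retention_gain_pts = 89 - 68                # junior retention, percentage points
safety_gain_pts = 92 - 45                   # "safe asking questions", percentage points

print(f"Questions per month: about {question_ratio:.1f}x")
print(f"Revision rounds: {revision_change:.1f}%")
print(f"Junior retention: +{retention_gain_pts} points")
print(f"Safe to ask questions: +{safety_gain_pts} points")
```

On that assumption, questions rose roughly seventeenfold, revision rounds fell by about 44%, and the retention and "safe to ask" figures rose by 21 and 47 percentage points respectively.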

But the most important change was the team's transformation into a learning organization.

"We no longer need to hide our knowledge gaps," explained one team member.

"We've created a culture where asking questions helps everyone grow together."

Chapter Ten: The Lesson for Knowledge Work

Motrix's transformation offers valuable insights for any organization dealing with complex technical information:

- Make ignorance acceptable - Learning can't happen when questions are forbidden

- Use AI as a conversation starter - Technology works best when it facilitates human interaction

- Document collective discoveries - Shared learning should benefit the entire organization

- Celebrate question-asking - Curiosity should be rewarded, not punished

Epilogue: The True Language of Innovation

The "lost conversations" that Motrix recovered weren't just about technical drawings—they were about the fundamental human need to learn together.

Their AI system didn't replace human interpretation; it created conditions where human interpretation could flourish through collaboration. The result was both better technical outcomes and stronger team relationships.

"The most meaningful lines in any diagram aren't the ones that show connections between components—they're the ones that show connections between minds."

About This Case: This case study explores how an automotive component manufacturer used AI-powered drawing analysis not just to improve technical accuracy, but to restore collaborative learning culture, demonstrating that successful technology implementation requires equal attention to human psychological needs and technical capabilities.